(Housekeeping notes: This will be the last Shell Game post of the year, and I wanted to take a moment to thank everyone who’s listened, read, shared, subscribed—especially PAID subscribers—and otherwise supported the show and newsletter this year. It’s been a ride since we put out the first episode back in July. This week we landed on another best-of-the-year list at The Guardian, and it looks like we’ll end the year still sitting in or around the top 10 technology podcasts on Apple, where we’ve stayed almost since launch. That feels truly extraordinary for an independent show with six episodes made by three people and marketed by word of mouth, and we’re grateful.

Also: last week I wrote an essay for the legendary audio storytelling site The Transom about what AI means for podcasting and audio journalism, if you want to check it out. There'll be more fun stuff in the new year, including, of course, a second season of Shell Game sometime in 2025, which we're working on now. Happy holidays, and I hope you'll stay with us.)

I'm not a big fan of journalists wading into the prediction business. For me, the job is finding, reporting, and telling stories that reveal something about the world as it is. At their best, particularly in technology reporting, these stories can also illuminate where things might be going. But the "ten predictions for 2025's biggest AI [or media, or political] developments" racket, whatever value the market might find in it, has always seemed to me a separate gig, and a dubious one to mix with the work of reporting. That said, when we set out to make Shell Game we did have to make one significant assumption about the future, if not necessarily a prediction: namely, that AI voice agents were going to proliferate in a variety of forms, some difficult to foresee, and that they'd start to become part of our lives in the coming years.

For better or worse, we’ve seen this prove out over just the months since the end of Season 1, with AI agents deployed right at the heart of the questions we explored in the show. A few examples:

Back in the spring and early summer, I was excited to get even a couple of generative AI-based scam calls to the line I'd set up. Now more than half of the calls coming in on a given day are typically from AI agents. AI voice impersonation scams, meanwhile, have exploded worldwide.

Whereas eight months ago it felt a little… demented to put two AI Evans in conversation with each other, by mid-December Google's NotebookLM had done it millions of times.

To some folks back in July, the idea of sending “digital twins” to work meetings seemed a thing of the distant future—or no future at all. Now Microsoft is preparing to roll out voice cloning in its Teams apps, so that your digital twin can hold meetings in nine other languages. Companies are already moving to combine video avatars and voice cloning, for an even weirder digital twin experience.

Underlying our assumption about the coming proliferation of voice agents was another one: namely, that once these technologies were unleashed, people would find uses for them that their creators never intended. We’ve seen this too, with NotebookLM. Already, its podcast feature is enabling what I once called “an endless supply of made-up garbage” but writer Max Read more sharply branded as “AI slop.”

Just a few weeks ago, hundreds of millions of Spotify listeners were surprised to find themselves presented with a NotebookLM-generated AI podcast summarizing their annual “Spotify Wrapped” results. One may or may not consider this slop, although Reddit commentary seemed to be running toward “AI podcast sucks.” But NotebookLM voices are also now being smuggled into all varieties of undeniable slop on YouTube.

The dominant AI chatbot makers themselves (Google, Anthropic, and OpenAI) have been busy integrating voice into their apps, letting users converse directly with their large language models and encouraging us to view them as something more than just text boxes. Just last week, OpenAI launched the phone number 1-800-CHAT-GPT, a voice-agent-powered line that anyone can call for free for up to fifteen minutes a month. (Naturally, I took the opportunity to have AI Evan ring it up; that's the conversation at the top of this post. The pair's endless "I can't wait to see what we'll create and accomplish" back-and-forth had me cracking up about AI small talk in a way I hadn't in months.)

All of which brings me to another experience I had (or rather, my AI had) with OpenAI's voice technology for ChatGPT. As we mentioned in Episode 5 of the show, developers and startups working with AI voice agents, such as the people building and using Vapi and RetellAI (the calling platforms I used in the show), had been waiting desperately for OpenAI to launch what the company called "advanced voice mode," which it had demonstrated in May. (Vapi, incidentally, recently announced that it had raised $20 million to expand its voice agent business.) According to OpenAI's demos, the new technology would enable "voice to voice" AI: chatbots and agents that could take voice inputs directly and respond without having to translate that voice to text and back again. This promised lower latency, and even a better ability to detect the emotions conveyed by a voice's volume and pitch.

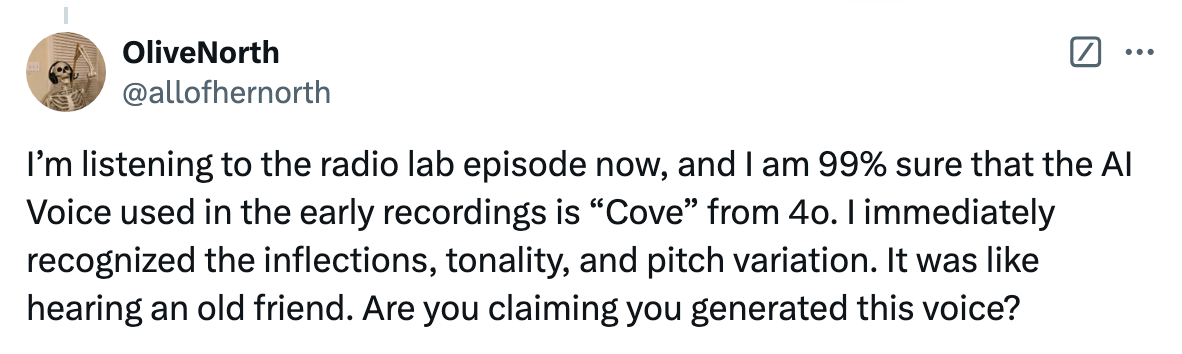

In the meantime, OpenAI had released what I guess you’d call “regular mode” voices that you could talk to in the company’s apps. I know this because when Shell Game came out, I began hearing from listeners that one of these voices, “Cove,” sounded very much like the AI cloned version of me. Some even suggested that I’d just used Cove in the show, rather than cloning my own voice, so certain were they that the two voices were the same:

Hard to imagine how I would have pulled this off. But in any case, since I started making Shell Game before Cove was released, I have an alibi.

Other listeners suggested that Cove might have instead appropriated my voice. This, to me, also seemed extremely far-fetched. OpenAI does have a poor reputation on this front, having been forced to suspend its use of one synthetic voice under threat of lawsuit from Scarlett Johansson. But I feel that my voice and I lack the celebrity profile that would make it worth lifting, even by the most sticky-fingered corporations among us.

Still, when I gave it a listen, Cove did have some AI Evan Ratliff elements to it. So I decided to put it to the ultimate test, and have the two voices converse with each other: